Using MLAAD for Source Tracing of Audio Deepfakes

Source tracing involves identifying the origin of synthetic audio samples. For example, given a set of audio deepfakes circulating on social media, a crucial question is whether these were generated using the same text-to-speech (TTS) model. If they were, it could indicate a single source, potentially revealing coordinated misinformation efforts. This capability is essential for holding developers of TTS and instant voice cloning tools accountable for misuse.

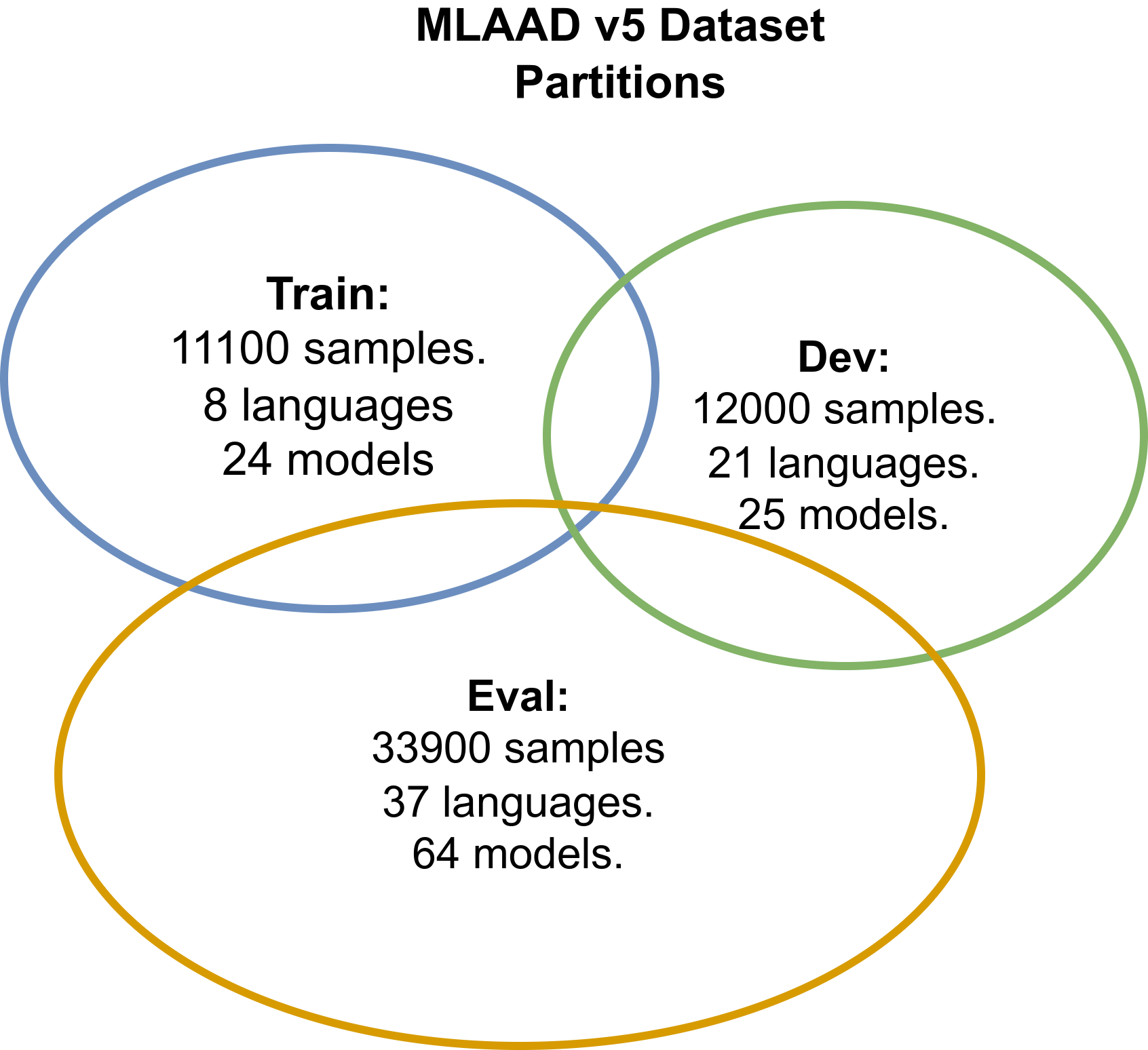

This page outlines the partitioning of MLAAD for use in source tracing. The dataset is divided into training, development, and evaluation subsets, along with protocols to train and evaluate models.

Downloads

- Protocols (list of files for train, dev, and eval): Download mlaad_for_sourcetracing.zip

- The MLAAD dataset: Download and more information

- A reference implementation, demonstrating how to load data and train a model. Feel free to adapt it for your needs. Reference implementation .

Note: Use of the MLAAD dataset for the Interspeech 2025 Special Session is optional. Researchers may use any publicly available datasets or metrics for submissions. Papers introducing new metrics for source tracing are highly encouraged.